pd.read_parquet

pandas 0.21 introduced new functions for Parquet: `DataFrame.to_parquet` writes a DataFrame to the binary Parquet format, and `pd.read_parquet` reads it back. You can request either engine explicitly. For fastparquet, use `import pandas as pd` followed by `pd.read_parquet('example_fp.parquet', engine='fastparquet')`. For pyarrow, use `pd.read_parquet('example_pa.parquet', engine='pyarrow')`. A local Windows path works the same way: `parquet_file = r'f:\python scripts\my_file.parquet'`, then `file = pd.read_parquet(path = parquet…`. In Spark, the equivalent read is `df = spark.read.format("parquet").load("<parquet file>")`.

`sqlContext.read.parquet(dir1)` reads the Parquet files from dir1_1 and dir1_2. Right now I'm reading each directory separately and merging the DataFrames with `unionAll`. A year's worth of data is about 4 GB in size. Is there a way to read the Parquet files from dir1_2 and dir2_1?

Two related questions show other failure modes. One, titled "Reading parquet to pandas FileNotFoundError", begins "I have code as below and it runs fine". The other describes a really strange error that asks for a schema.

`DataFrame.to_parquet` writes the DataFrame as a Parquet file, using either the pyarrow or the fastparquet engine. These engines are very similar and should read and write nearly identical Parquet files.

On the Spark side, you need to create an instance of SQLContext first. This works from the PySpark shell, where `sc` is the SparkContext:

- `from pyspark.sql import SQLContext`
- `sqlContext = SQLContext(sc)`
- `sqlContext.read.parquet("my_file.parquet…`

In Spark 2.0 and later, the `spark` SparkSession exposes the same reader as `spark.read.parquet`.

How to read Parquet files directly from Azure Data Lake without Spark?

For testing purposes, I'm trying to read a generated file with `pd.read_parquet`. To read a Parquet file in an Azure Databricks notebook, use the `pyspark.sql.DataFrameReader` class directly. It loads the data as a PySpark DataFrame instead of going through pandas.

Modin Ray shows error on pd.read_parquet · Issue #3333 · modin-project

python - Pandas read_parquet partially parses binary column - Stack Overflow

The data is available as Parquet files.

PySpark read parquet: Learn the use of READ PARQUET in PySpark

pd.read_parquet: Read Parquet Files in Pandas • datagy

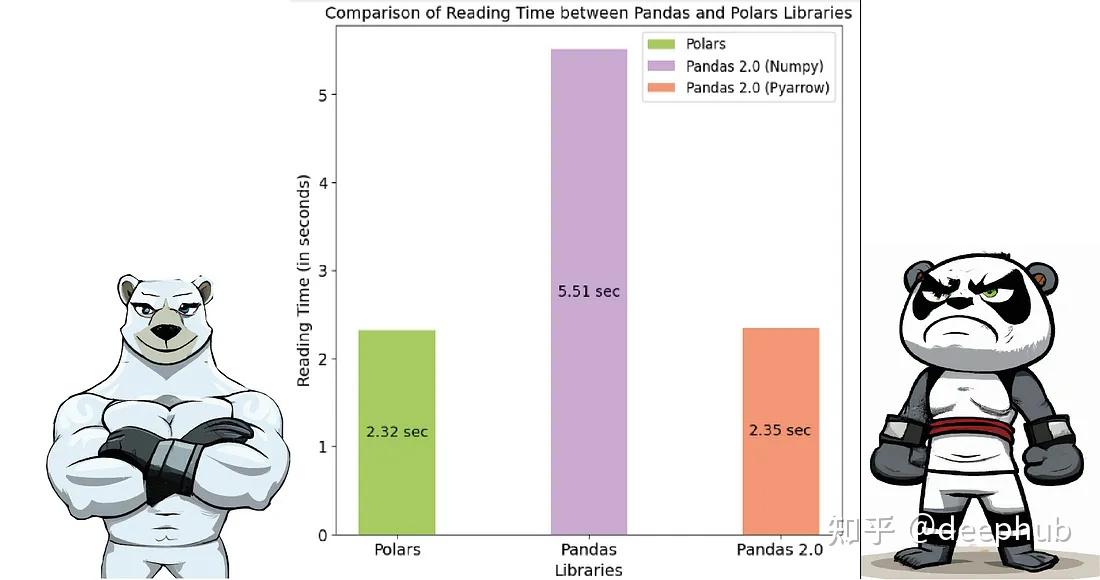

Pandas 2.0 vs Polars: a comprehensive speed comparison (Zhihu)

Spark Scala 3. Read Parquet files in Spark using Scala - YouTube

How to resolve a Parquet file issue

pandas.read_parquet(path, engine='auto', columns=None, storage_options=None, use_nullable_dtypes=_NoDefault.no_default, dtype_backend=_NoDefault.no_default, **kwargs)

I've just updated all my conda environments (pandas 1.4.1), and now I'm facing a problem with the pandas `read_parquet` function.

… Any) → pyspark.pandas.frame.DataFrame

`DataFrame.to_parquet(path=None, engine='auto', compression='snappy', index=None, partition_cols=None, storage_options=None, **kwargs)` is the writing counterpart of `read_parquet`.

pandas.read_parquet(path, engine='auto', columns=None, storage_options=None, use_nullable_dtypes=_NoDefault.no_default, dtype_backend=_NoDefault.no_default, filesystem=None, filters=None, **kwargs)

I'm working on an app that writes Parquet files.